Introduction

Financial institutions are facing a new generation of fraud driven by deepfakes. Once a fringe concern, synthetic media has become one of the fastest-growing threats in banking, fintech, and crypto. This blog explores the surge in deepfake-enabled fraud, real-world cases, regulatory developments, and how financial institutions can strengthen their defenses in 2025.

Key Takeaways

- Deepfake-related fraud caused more than $410M in losses in the first half of 2025 alone.

- Financial services face a 2,137% rise in deepfake fraud attempts since 2022, with each incident costing up to $680K.

- Major cases, such as the $193M Hong Kong fraud ring, show deepfakes being used at scale in KYC processes.

- Regulators worldwide, from FinCEN to NYDFS, are issuing new deepfake detection and compliance guidelines.

- Layered AI-powered detection, transparency, and employee training are now essential to defend against synthetic identity fraud.

The $193 Million Question: Why Banks Can’t Stop Deepfake Fraud

The call center agent verified the customer’s voice. Caller ID matched. Security questions checked out.

The wire transfer was approved.

Three days later, the real account holder reported fraud. The money was gone—washed through international accounts. The voice on the first call? A deepfake clone so convincing that even voice biometrics validated it.

This isn’t hypothetical. It’s the pattern emerging across financial institutions as deepfake tech accelerates from curiosity to the fastest-growing fraud vector in banking.

The Numbers You Can’t Ignore

- $410M lost in H1 2025—already more than all of 2024. Cumulative losses since 2019 approach $900M, and the trendline is still rising.

- 2,137% surge in deepfake fraud attempts over the last three years.

- Deepfakes now represent ~6.5% of detected fraud attempts; when they land, the average loss is about $500K, with individual incidents costing up to $680K.
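
To put the percentages above in scale, the arithmetic can be sketched in a few lines of Python. The inputs are the figures quoted in this post; the function name `growth_multiple` is purely illustrative, and the cumulative total is approximate, so the resulting share is a rough estimate.

```python
def growth_multiple(percent_increase: float) -> float:
    """Convert a percentage increase into a 'times the baseline' multiple."""
    return 1 + percent_increase / 100


# Figures quoted in this post
surge_pct = 2137            # rise in deepfake fraud attempts over three years
h1_2025_losses_m = 410      # losses in H1 2025, in $ millions
cumulative_losses_m = 900   # approximate cumulative losses since 2019, in $ millions

multiple = growth_multiple(surge_pct)
h1_share = h1_2025_losses_m / cumulative_losses_m

print(f"A {surge_pct:,}% rise means about {multiple:.1f}x the baseline volume")
print(f"H1 2025 is roughly {h1_share:.0%} of all losses since 2019")
```

In other words, attempt volume is a little over 22 times its 2022 baseline, and a single half-year accounts for close to half of the cumulative losses reported since 2019.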

The headline stats are stark, but the tactics behind them are the real story—and the real risk.

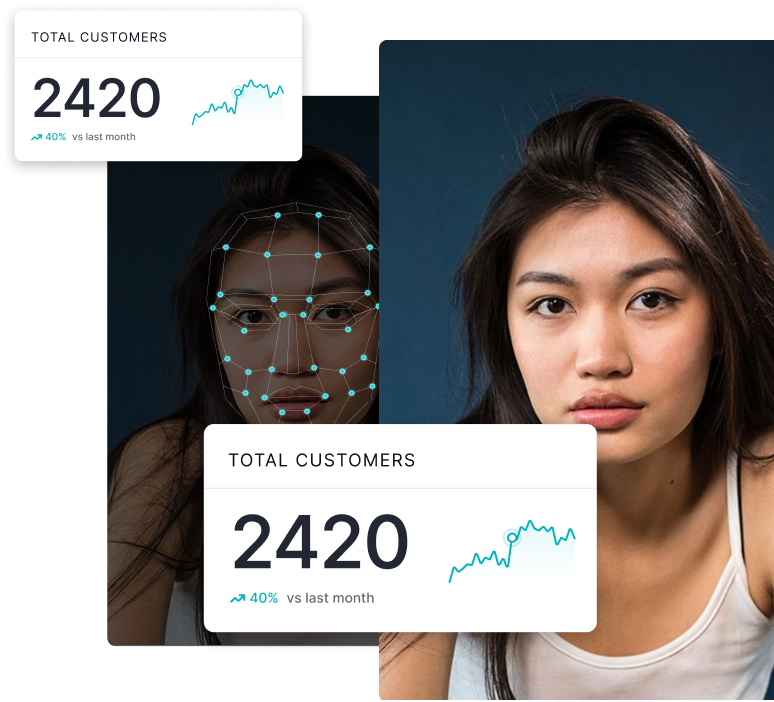

When Identity Verification Becomes the Attack Surface

Banks built digital transformation on a simple premise: verify at onboarding → trust thereafter.

Deepfakes shattered that model.

Case in point: In early 2025, Hong Kong police disrupted a deepfake-driven ring that opened accounts at scale—$193M in losses—by merging fraudsters’ faces with stolen IDs to bypass facial recognition. With 21 stolen HKIDs, the group filed 44 applications; 30 succeeded, enabling laundering and credit abuse. Once “verified,” they were trusted—and every downstream control assumed legitimacy.

The core problem: Most defenses focus on transactions, while breaches occur at onboarding. A synthetic identity that passes KYC looks normal until the bust-out.

Why Financial Services Is Uniquely Exposed

- High trust + high automation

Banking runs on verified identity and delegated authority. Deepfakes target that foundation, and automation reduces human “sense-checking.”

- Expanding attack surface

Onboarding, call centers, wire approvals, account recovery, P2P flows: every identity checkpoint is now a content-integrity problem.

- Delayed visibility

Synthetic identities can operate for months. There’s no “real” victim to complain, and anomaly models see normal behavior, because the account is fraudulent from inception.

Traditional tools catch transactions that look wrong. Deepfakes make people look right.

Hotspots & Patterns

- Regional spikes: Singapore (+1,500% in 2024), Hong Kong (+1,900%), North America (+1,700% YoY 2022→2023). Organized networks target markets with high digital-banking adoption and verification that can be beaten.

- Sector split:

  - Banks: voice-led call-center impersonation, wire approvals.

  - Fintechs: synthetic IDs at onboarding; P2P impersonation.

  - Crypto: KYC and account creation as primary breach points.

Wherever UX streamlines verification, fraudsters bring tools to match.

How the Attacks Actually Work (Today)

- Voice cloning from 3 seconds of audio—lifted from earnings calls, podcasts, LinkedIn. Good enough to fool biometrics and humans.

- Synthetic identities (“Frankenstein” personas) blending deepfaked faces, forged docs, and real PII—undetected by classic ID theft monitoring.

- Multimodal orchestration—video selfie + voice check + document set—each reinforcing the other across disconnected systems.

Point-in-time checks fail because adversaries coordinate across layers.
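
One way to picture why isolated checks miss coordinated attacks is a minimal sketch like the one below. It assumes each modality check emits a score for how likely its input is synthetic, and it fuses them with a noisy-OR rule, which treats the scores as independent probabilities. The thresholds, scores, and function names are all hypothetical, and noisy-OR is only one of several possible fusion rules, not a description of any particular vendor's method.

```python
from math import prod

# Hypothetical thresholds, chosen only for illustration
PER_CHECK_THRESHOLD = 0.5   # a single check flags at or above this score
SESSION_THRESHOLD = 0.7     # the combined session flags at or above this score


def passes_individually(scores: dict[str, float]) -> bool:
    """Point-in-time view: every check is judged in isolation."""
    return all(score < PER_CHECK_THRESHOLD for score in scores.values())


def session_risk(scores: dict[str, float]) -> float:
    """Noisy-OR fusion: probability that at least one modality is synthetic,
    assuming the per-modality scores are independent probabilities."""
    return 1 - prod(1 - score for score in scores.values())


# Made-up scores (probability the input is synthetic) for one onboarding session
session = {"video_selfie": 0.40, "voice_check": 0.45, "document_set": 0.35}

risk = session_risk(session)
print("passes every isolated check:", passes_individually(session))
print(f"fused session risk: {risk:.2f}")
print("flagged at session level:", risk >= SESSION_THRESHOLD)
```

With these made-up numbers, every check passes on its own, yet the fused score crosses the session threshold. Three mildly suspicious signals in one session are more telling than any single one.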

Regulators Are Moving

- FinCEN (late 2024): deepfake red flags—identity photo inconsistencies, suspicious webcam plugins, MFA bypass attempts.

- NYDFS: deepfake detection should be part of baseline cyber programs.

- ECB / Bank of Italy: public warnings on impersonation.

- MAS (Sept 2025): best practices for mitigating deepfake risk across FS.

Translation: This is now systemic risk—and an expectation, not an optional enhancement.

The Arms Race—and What Actually Works

Detection is improving (micro-movement analysis, voice frequency anomalies, digital artifacting), but attackers iterate fast with access to the same AI.

What works in practice:

Detection embedded at the verification layer + explainable outputs so analysts act quickly without drowning in alerts or adding friction for real customers.

Example: bunq (EU neobank) integrated explainable deepfake detection into KYC, achieving >90% accuracy and ~6× reduction in manual review time—without adding friction for legitimate users. The win wasn’t just better models; it was right-layer integration and human-readable reasons for every flag.
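
As a rough illustration of what "explainable outputs" can mean at the verification layer, the sketch below pairs a detection score with human-readable reasons and routes only the uncertain middle band to an analyst. The `Verdict` type, the cut-offs, and the reason strings are all hypothetical. This is not bunq's integration or any vendor's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Verdict:
    """Hypothetical detector output: a score plus human-readable reasons."""
    score: float                      # 0.0 (likely genuine) to 1.0 (likely synthetic)
    reasons: list[str] = field(default_factory=list)


# Illustrative cut-offs; real values would be tuned on labelled data
REVIEW_AT = 0.5
REJECT_AT = 0.9


def route(verdict: Verdict) -> str:
    """Map a verdict to an onboarding action; only the uncertain
    middle band is sent to a human analyst."""
    if verdict.score >= REJECT_AT:
        return "reject"
    if verdict.score >= REVIEW_AT:
        return "manual_review"
    return "approve"


def summarize(verdict: Verdict) -> str:
    """Render the decision and its reasons as one line for an analyst queue."""
    action = route(verdict)
    why = "; ".join(verdict.reasons) or "no anomalies reported"
    return f"{action} (score {verdict.score:.2f}): {why}"


flagged = Verdict(0.72, ["inconsistent blink pattern", "face boundary blending artifacts"])
clean = Verdict(0.08)

print(summarize(flagged))
print(summarize(clean))
```

The design point is that the analyst receives the reasons alongside the score, so a flagged case can be confirmed or cleared quickly, while low-score sessions pass through without extra steps.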

Why This Wave Is Different

Past waves targeted process or technology. Deepfakes target perception.

When a voice sounds exactly like your CEO and a selfie looks perfect—what do you investigate? The fraud lives in the content. Humans are wired to trust it; old tools were never built to parse it.

This is why “spot-the-fake” training won’t cut it. You need systems that can analyze what humans can’t—and they must sit everywhere identity is verified.

The Tightrope: Security vs. Seamless UX

- Add too much friction: conversion drops, support bogs down.

- Add too little: losses grow—and trust erodes.

Leaders avoid the false choice by deploying high-accuracy, explainable detection that runs invisibly—flagging only true risks and speeding legitimate customers.

If Finance Gets This Wrong

Losses will climb. More importantly, trust in digital banking will erode.

Digital finance assumes institutions can verify who you are—and that this verification holds. If customers believe anyone can fake onboarding, the value prop of digital banking collapses.

We’re not there. But regulators, boards, and fraud teams are treating deepfakes as a now problem for a reason.

How DuckDuckGoose AI Helps Financial Services Stay Ahead

We help IDV providers, banks, neobanks, crypto platforms, and fintechs close the gap with explainable AI that slots into your existing stack.

What We Detect

- Real-time analysis of images, video, and audio for synthetic media & manipulations

- Multimodal signals to catch coordinated (audio + video + docs) attacks

- Synthetic identity patterns traditional KYC misses

Why Teams Choose Us

- Explainable results—analysts see why content was flagged (no black boxes)

- Lower false positives → less friction, faster decisions

- Compliance-ready architecture aligned with FinCEN, NYDFS, ECB, MAS

- Seamless integration into onboarding, KYC, AML, fraud ops—no re-architecture

Proven Impact

- bunq: 90%+ detection accuracy, ~6× reduction in manual review time, zero added friction for real customers.

[Read the full bunq case study →]

Protect the foundation of digital trust: proving the person on the other side of the screen is real—in real time, with reasons your team and regulators can trust.

