Introduction

Deepfakes have moved beyond social media experiments and into the core of business risk. Using AI to generate hyper-realistic videos and voices, attackers can now impersonate executives, manipulate media, and undermine trust in seconds. This post explains what deepfakes are, how they affect businesses, and what steps organizations can take to protect themselves in 2025.

Key Takeaways

- Deepfakes are synthetic media generated with AI that can convincingly mimic real people.

- The number of deepfake incidents surged 250% year-over-year, with cumulative fraud losses nearing $900M, more than $400M of that in the first half of 2025 alone.

- Financial services, healthcare, and technology are among the most targeted industries.

- Deepfakes are eroding public trust: 77% of consumers now demand AI transparency from brands.

- Businesses need layered defenses combining detection tools, employee training, and crisis response plans.

What Are Deepfakes?

The Business Threat Hiding in Plain Sight

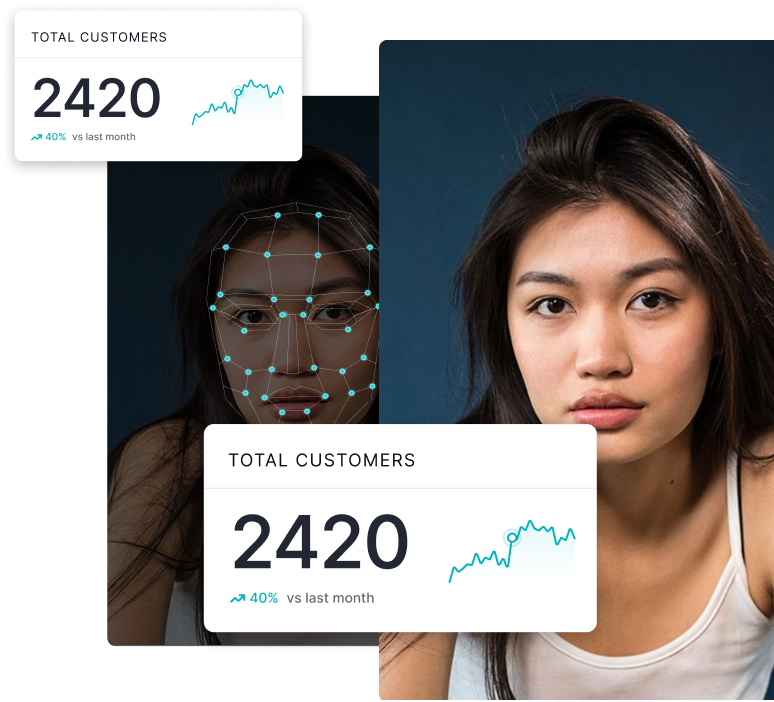

A deepfake is synthetic media created with AI to convincingly replicate someone’s face, voice, or mannerisms. Unlike basic photo edits or voice mimicry, deepfakes are trained on massive datasets and learn the subtle cues that make a person recognizable—how their mouth shapes sounds, the timbre of their voice, even signature gestures and expressions.

The result?

A customer service agent who looks and sounds exactly like your employee—but isn’t. A vendor “verification” video that sails through biometric checks despite being entirely synthetic. A medical image indicating a condition that never existed.

If this sounds futuristic, you’re already behind. Deepfakes have moved from research labs into criminal toolkits, and they’re being used against businesses now—with the pace accelerating every month.

The Numbers Tell an Urgent Story

- Incidents are exploding. In Q1 2025 alone, 179 deepfake-related incidents were recorded—already surpassing the total for all of 2024 (Surfshark).

- Volume is skyrocketing. Estimated deepfake files jumped from ~500,000 in 2023 to ~8 million in 2025. Voice cloning surged throughout 2024 and continues to accelerate.

- An industrial supply chain is forming. MarketsandMarkets projects the deepfake AI market to grow from $857M in 2025 to $7.2B in 2031, a 42.8% CAGR. While some growth reflects legitimate uses, a meaningful slice fuels crime.

- Losses are mounting. Nearly $900M has already been lost to deepfake fraud worldwide, with $400M+ disappearing in the first half of 2025 alone. Analysts forecast $40B in generative-AI-linked fraud losses by 2027.

- Incident severity is high. The average business lost ~$500,000 per deepfake incident (Keepnet).
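
For readers who want to sanity-check growth figures like the market projection above, here is a minimal Python sketch of the standard compound annual growth rate formula. The function name `cagr` is our own illustrative choice, and the inputs are simply the rounded figures quoted in the list.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $857M in 2025 growing to $7.2B in 2031.
implied_rate = cagr(857e6, 7.2e9, 2031 - 2025)
print(f"Implied CAGR: {implied_rate:.1%}")
```

The result lands within a fraction of a percentage point of the quoted 42.8%; a small difference is expected because the endpoints are rounded.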

Why Your Industry Is Probably Already a Target

Financial services & fintech

Fintechs report average losses of $637k per incident, versus $570k for traditional banks (The Paypers). Criminals use facial morphing and voice cloning to defeat biometric KYC and launder funds via synthetic identities.

Healthcare

Peer-reviewed work has shown GAN-generated medical images can convincingly insert or remove tumors in CT scans—misleading both clinicians and diagnostic AI. The implications for treatment, insurance fraud, and liability are profound.

Technology, law enforcement, and crypto

Sectors with high-value data and critical infrastructure face elevated attack rates.

Reality check: No sector is immune. What demanded specialized hardware and expertise three years ago can now be done with consumer-grade tools—or even a $50 darknet subscription.

The Damage Goes Beyond the Balance Sheet

Financial losses are measurable. Reputational damage compounds.

- Market impact. A fabricated video of an executive or a fake recall announcement can wipe millions from market cap within hours. Even after the fake is debunked, trust can take months or years to recover.

- Consumer trust is brittle. Nearly three-quarters of consumers say they’re reluctant to buy unless they trust both the content and the company behind it. In Vercara’s survey, 75% of U.S. consumers would stop purchasing from a brand after a cyber incident.

- Internal friction rises. Employees begin second-guessing voice/video instructions from leadership. When teams don’t trust a CEO’s video call, operational velocity suffers.

Transparency Is Now a Competitive Requirement

Consumers are encountering deepfakes—and they’re adapting. In 2024, over a quarter of people reported seeing a deepfake scam, and nearly one in ten fell victim (Surfshark). A 2025 Baringa survey found 77% want brands to disclose AI-generated content—and that disclosure directly affects trust and purchase decisions.

Bottom line: Proactive transparency about AI use and deepfake defenses is becoming a market differentiator.

The Preparedness Gap Is Wider Than You Think

Despite the growth in attacks:

- 80% of businesses lack formal deepfake response protocols (Tech.co, 2024).

- Over half of executives say their employees have had no training to recognize or respond to deepfake scams.

That’s not just a security gap—it’s a competitive liability. While some firms debate whether deepfakes are a “real” threat, others are already updating verification workflows and deploying detection.

What Action Actually Looks Like

1) Treat it as a current risk—not a future possibility

Q1 2025 exceeded all of 2024 for incidents. The threat is accelerating, not emerging.

2) Layer AI detection where it matters

Integrate deepfake detection at KYC, onboarding, voice authentication, video verification, and document checks. Awareness training and manual review alone won’t keep pace.

3) Make explainability non-negotiable

Black-box detections cause bottlenecks and compliance headaches. Your teams need human-readable reasons for flags to act quickly; auditors need traceable logic.
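
As a rough illustration of what an explainable flag might carry, here is a minimal Python sketch. The `DetectionFlag` class, its field names, and the sample reasons are all hypothetical and do not describe any particular vendor's output format; the point is only that the score travels together with readable reasons and a model version that auditors can trace.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DetectionFlag:
    """Hypothetical record for one flagged piece of media."""
    media_id: str
    score: float          # detector confidence that the media is synthetic, 0 to 1
    reasons: list[str]    # human-readable explanations for analysts
    model_version: str    # lets auditors trace which model made the call
    flagged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_audit_record(self) -> str:
        """Serialize the flag as JSON so it can be stored in an audit log."""
        return json.dumps(asdict(self), sort_keys=True)


flag = DetectionFlag(
    media_id="onboarding-video-001",
    score=0.93,
    reasons=[
        "lip movement out of sync with audio",
        "inconsistent lighting around face edges",
    ],
    model_version="detector-v0-example",
)
record = flag.to_audit_record()
print(record)
```

An analyst can act on the listed reasons straight away, while the serialized record gives compliance teams something concrete to review later.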

4) Train for verification, not “spot-the-fake”

Shift culture to “verify before you trust.” Use out-of-band callbacks for financial instructions, multi-channel verification for sensitive requests, and time delays for high-value actions.
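
One way to make "verify before you trust" concrete is to write the rules down as policy. The Python sketch below is a simplified illustration: the `Request` fields, the check names, and the threshold values are assumptions made for this example, not recommended settings.

```python
from dataclasses import dataclass

# Assumed values for illustration only; set them from your own risk policy.
HIGH_VALUE_THRESHOLD = 50_000   # amount at or above which a time delay applies
HOLD_HOURS = 24                 # length of the delay for high-value actions


@dataclass
class Request:
    description: str
    amount: float = 0.0
    is_financial: bool = False   # payment or transfer instruction
    is_sensitive: bool = False   # e.g. change of bank details or access rights


def required_checks(req: Request) -> list[str]:
    """Return the verification steps a request must pass before anyone acts on it."""
    checks: list[str] = []
    if req.is_financial:
        checks.append("out_of_band_callback")
    if req.is_sensitive:
        checks.append("multi_channel_verification")
    if req.amount >= HIGH_VALUE_THRESHOLD:
        checks.append(f"hold_{HOLD_HOURS}h")
    return checks


wire = Request("Urgent wire requested on a video call", amount=250_000, is_financial=True)
print(required_checks(wire))
```

The useful property is that the required checks depend only on what is being asked for, never on how convincing the caller looked or sounded.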

5) Build and rehearse a crisis plan

If a fabricated CEO video goes viral, who speaks, on what channels, with what evidence? Prepare communications templates, legal workflows, and rapid forensic validation now.

6) Use regulation as momentum

The EU AI Act drives transparency and risk management in biometric verification; DORA mandates operational resilience; FinCEN has flagged deepfake indicators. These are executive levers to fund the controls you need anyway.

The Window Is Closing

Early adopters of deepfake defenses gain a structural advantage. Late movers will shoulder outsized losses and then race to meet the new baseline. Implementing detection and verification today places you ahead of tomorrow’s standard—and reduces immediate fraud exposure.

This isn’t about whether you’ll encounter deepfake fraud. It’s about whether you’ll have defenses in place when it happens.

How DuckDuckGoose AI Closes the Gap

We build explainable deepfake detection for businesses that need to act now.

What We Do

- Real-time detection across images, video, and audio, embedded at KYC, onboarding, and verification checkpoints.

- Explainable outputs that show why content was flagged—enabling confident, fast decisions.

- Seamless integration with existing KYC/AML/IDV workflows—no friction for legitimate users.

- Compliance-ready architecture aligned with EU AI Act, GDPR, and FinCEN guidance from day one.

Why Organizations Choose Us

- Fewer false positives through analyst-friendly context—not just raw alerts.

- Faster response times because detections include human-readable reasoning.

- Future-proof compliance via transparent, auditable outputs.

- Plug-in deployment to your current stack—no disruptive re-architecture.

Who We Help

- Identity verification providers securing digital onboarding.

- Financial institutions blocking synthetic identity fraud and BEC-style deepfake attacks.

- Fintech companies safeguarding high-value transactions and account access.

- Any organization where trust and verification are mission-critical.

The deepfake threat is moving faster than most defenses. We help you close the gap before an incident costs you $500,000.

Audit Your Verification Flow

Run a free readiness check to see where deepfakes can bypass your KYC today.
